Written by Mohammed Nafees

Published on Sep 6, 2024

Topic: Cybersecurity

Reliable communication between Virtual Private Clouds (VPCs) in different projects is crucial for maintaining a robust and efficient infrastructure. VPC peering makes this possible, but it can introduce hidden challenges that show up as frustrating timeouts and connectivity issues. These problems often stem from deeper causes such as misconfigured firewall rules, restrictive Linux iptables rules, and peering connections that are not actually healthy.

In this post, we'll explore these underlying causes using Google Cloud as our platform and provide insights on how to troubleshoot and resolve them, ensuring your cross-project VPC communication remains smooth and reliable.

Step One: Check the Firewall Rules

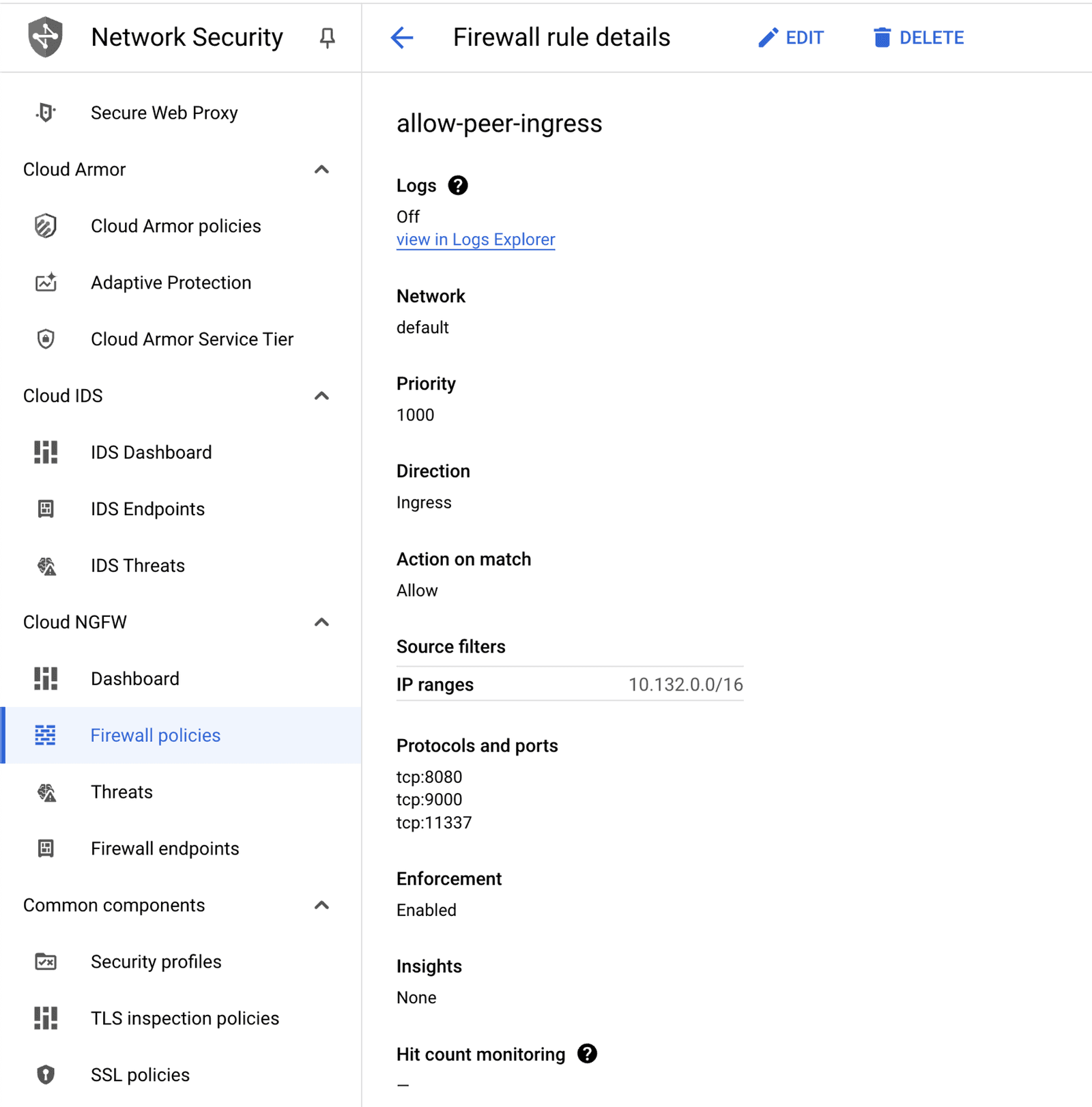

As a first step, each VPC should have firewall rules that specifically allow the IP CIDRs of the other VPC. VPC peering exchanges routes between the two networks, but not firewall rules, so each side has to admit the other's traffic explicitly. For example, if VPC A needs to communicate with VPC B, you must create an ingress firewall rule in VPC A allowing traffic from the IP range of VPC B, and vice versa. These rules should specify the protocols and ports your application requires, whether that is HTTP, HTTPS, or any custom ports you might be using.

The above firewall rule allows incoming requests from the CIDR range 10.0.0.0/8 on TCP ports 8080, 9000, and 11337. In practice, prefer the narrowest range that still covers the peered VPC's subnets.
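For readers who prefer the command line over the console, a rule like this could be created with `gcloud`. This is a minimal sketch under stated assumptions: the project, network, rule name, and peer CIDR below are hypothetical placeholders, not values from this setup. The command is wrapped in a function so nothing runs until you call it.

```shell
# Hypothetical placeholders; replace them with your own values.
PROJECT_A="project-a"                  # project that owns VPC A
NETWORK_A="vpc-a"                      # name of VPC A
PEER_CIDR="10.20.0.0/16"               # CIDR range used by the peered VPC B
PORTS="tcp:8080,tcp:9000,tcp:11337"    # application ports to open

# Create an ingress rule in VPC A that admits traffic from VPC B's range.
allow_peer_ingress() {
  gcloud compute firewall-rules create "allow-from-vpc-b" \
    --project="$PROJECT_A" \
    --network="$NETWORK_A" \
    --direction=INGRESS \
    --action=ALLOW \
    --rules="$PORTS" \
    --source-ranges="$PEER_CIDR"
}
```

Call `allow_peer_ingress` once, then create the mirror-image rule in VPC B with that project's values so traffic is admitted in both directions.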

The Next Step: Checking iptables

Once you have set up the appropriate firewall rules for both VPCs, the next step is to look into the iptables rules on your GCE VMs or GKE cluster nodes. iptables is the user-space tool for configuring the Linux kernel's netfilter packet filter, which decides how incoming and outgoing traffic is handled. Misconfigured or overly restrictive rules can cause traffic to be blocked or dropped, leading to further communication issues between your VPCs.

First, you need to SSH into your GCE VM or GKE node to examine the rules. You can use a command like `sudo iptables -L -v -n` to list the current rules and see how they are set up. Look for any rules that might be blocking traffic from the IP ranges of your peered VPC. Common issues include default policies set to DROP or specific rules that reject traffic from certain IP addresses or ranges.

If you find any such rules, you will need to modify or remove them so that traffic can flow between your VPCs. You can also add an explicit allow rule with the `iptables` command, specifying the source IP range, protocol, and port. Because iptables evaluates rules in order and stops at the first match, insert the rule ahead of any blocking rule rather than appending it. For example, `sudo iptables -I INPUT 1 -s <source-ip-range> -p tcp --dport <port> -j ACCEPT` allows traffic from a specific source IP range on a particular port. A rule appended with `-A` after a matching DROP or REJECT rule never takes effect.
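The inspection and the fix can be sketched as two small shell functions. The CIDR and port are hypothetical placeholders. The sketch assumes the blocking rule lives in the `INPUT` chain of the default filter table, which may not match your node.

```shell
# Hypothetical placeholders; replace them with your own values.
PEER_CIDR="10.20.0.0/16"   # CIDR range of the peered VPC
APP_PORT="8080"            # port your application listens on

# List INPUT rules with packet counters and rule numbers, so DROP or REJECT
# rules that match the peer range are easy to spot.
show_input_rules() {
  sudo iptables -L INPUT -v -n --line-numbers
}

# Insert an ACCEPT rule at position 1 so it is evaluated before any
# existing DROP or REJECT rule (-A would append it after them).
allow_peer_input() {
  sudo iptables -I INPUT 1 -s "$PEER_CIDR" -p tcp --dport "$APP_PORT" -j ACCEPT
}
```

Rules added this way live only in memory and are lost on reboot unless you persist them with your distribution's mechanism. On GKE nodes, much of the rule set is managed by the cluster itself, so manual changes there are best treated as a diagnostic step rather than a permanent fix.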

Final Step: Verify VPC Peering Health Check

After configuring the firewall rules and iptables, the final step is to verify that the peering connection itself is healthy. Google Cloud provides Connectivity Tests, part of Network Intelligence Center, to verify that the VPCs can communicate as expected. This check is crucial because it can help identify underlying issues that might not be immediately apparent, such as routing problems or misconfigurations that could still cause timeouts or connectivity issues.

To perform this check, first open the VPC network peering page in the Google Cloud Console and confirm that the peering is shown as active. A peering only becomes active once it has been configured from both VPCs. Then open Connectivity Tests in Network Intelligence Center and create a test between an endpoint in each VPC. The test analyzes the path and reports any issues, such as missing routes or blocking firewall rules, that could impede communication. A reachable result indicates that the basic network path between the peered VPCs is configured correctly.

If the test fails, it will provide diagnostic information that you can use to troubleshoot further. This might involve reviewing route tables to ensure that the correct routes are in place for the peered networks or revisiting your firewall and iptables configurations to address any overlooked issues.
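The same verification can be scripted with `gcloud`. This is a sketch under stated assumptions: the project, network, VM paths, test name, and port are hypothetical. The commands are wrapped in functions so nothing runs until you call them.

```shell
# Hypothetical placeholders; replace them with your own values.
PROJECT_A="project-a"
NETWORK_A="vpc-a"
SRC_VM="projects/project-a/zones/us-central1-a/instances/vm-a"
DST_VM="projects/project-b/zones/us-central1-a/instances/vm-b"
TEST_NAME="vpc-a-to-vpc-b-8080"

# List the peerings on VPC A; the STATE column should read ACTIVE.
list_peerings() {
  gcloud compute networks peerings list \
    --project="$PROJECT_A" \
    --network="$NETWORK_A"
}

# Create a Connectivity Test for TCP port 8080 from the VM in VPC A
# to the VM in VPC B.
create_connectivity_test() {
  gcloud network-management connectivity-tests create "$TEST_NAME" \
    --project="$PROJECT_A" \
    --source-instance="$SRC_VM" \
    --destination-instance="$DST_VM" \
    --protocol=TCP \
    --destination-port=8080
}

# Print the verdict of the analysis, for example REACHABLE or UNREACHABLE.
show_test_result() {
  gcloud network-management connectivity-tests describe "$TEST_NAME" \
    --project="$PROJECT_A" \
    --format="value(reachabilityDetails.result)"
}
```

Run `list_peerings` first. If the peering is not active, the connectivity test cannot succeed, and the fix belongs in the peering configuration rather than in firewall rules or iptables.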

By completing this check, you can be much more confident that your VPC peering setup is operational, allowing reliable communication between your VMs and services across different VPCs. This final verification step helps solidify the stability of your network infrastructure.

Caveat: VPC_NATIVE Network Mode for GKE Clusters

It's important to note that Google Kubernetes Engine (GKE) clusters work best with VPC peering when they are configured in VPC_NATIVE network mode. This mode, also known as alias IP mode, assigns Pod and Service IP addresses from secondary ranges of the VPC subnet, so Pod addresses are natively routable within the VPC and across the peering. Routes-based clusters instead rely on custom static routes, which are only shared with a peered VPC when custom route export and import are enabled on the peering. Without VPC_NATIVE mode, you might therefore encounter additional complexity and connectivity issues between your GKE clusters and other VPC resources. Make sure your GKE clusters are set up in this mode to fully leverage the benefits of VPC peering.

If you use Terraform to deploy and maintain your infrastructure, this can simply be done using the following configuration:
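The original configuration is not reproduced here, so the following is a minimal sketch rather than the exact setup used. It relies on the `networking_mode` argument and the `ip_allocation_policy` block of the `google_container_cluster` resource. The cluster name, location, network, subnetwork, and secondary range names are hypothetical.

```hcl
# Minimal sketch of a VPC-native GKE cluster. All names are placeholders.
resource "google_container_cluster" "example" {
  name               = "example-cluster"
  location           = "us-central1"
  initial_node_count = 1

  network    = "vpc-a"
  subnetwork = "subnet-a"

  # Use alias IP ranges instead of routes-based networking.
  networking_mode = "VPC_NATIVE"

  ip_allocation_policy {
    # Secondary ranges that must already exist on the subnetwork.
    cluster_secondary_range_name  = "pods"
    services_secondary_range_name = "services"
  }
}
```

The two secondary ranges must not overlap with any range in the peered VPC, since overlapping subnet ranges prevent the peering from being established.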

Caveat: Calico Network Policy for GKE clusters

When using Calico network policies in your GKE cluster, it's important to be aware that these policies can introduce additional constraints on network traffic, potentially leading to unexpected connectivity issues. Calico allows you to define fine-grained network policies, including egress policies that control outbound traffic from your pods. These policies can inadvertently block traffic necessary for VPC peering to function correctly.

If your GKE cluster is using Calico for network policy enforcement, you need to carefully review and configure egress policies to ensure they allow traffic to the peered VPC. Here are a few key steps to consider:

Review Existing Egress Policies:

Start by reviewing all existing egress policies in your cluster. Ensure there are no policies that block traffic to the IP ranges of the peered VPC. Look for rules that specify deny actions or restrictive CIDR blocks.

Create or Modify Egress Policies:

If necessary, create or modify egress policies to explicitly allow traffic to the peered VPC's IP ranges. For example, you can create an egress policy that permits all traffic to the CIDR range of the peered VPC:
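The original policy is not reproduced here, so the following is a sketch under stated assumptions. It uses a standard Kubernetes `NetworkPolicy`, which Calico enforces on GKE, rather than a Calico-specific resource. The policy name, namespace, and CIDR are hypothetical. The manifest is applied through a shell function so nothing runs until you call it.

```shell
# Hypothetical placeholders; replace them with your own values.
PEER_CIDR="10.20.0.0/16"   # CIDR range of the peered VPC
NAMESPACE="default"        # namespace whose pods need to reach the peer

# Apply a policy that allows all egress traffic from every pod in the
# namespace to the peered VPC's CIDR range.
apply_peer_egress_policy() {
  kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-egress-to-peered-vpc
  namespace: ${NAMESPACE}
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - ipBlock:
            cidr: ${PEER_CIDR}
EOF
}
```

Network policies are additive, so this policy only adds an allowance on top of existing ones. Be aware that if the selected pods had no egress policy before, applying this one restricts their egress to the listed range alone, which also cuts off DNS unless another policy allows it.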

Test Connectivity:

After updating the egress policies, test the connectivity from your GKE pods to resources in the peered VPC. Ensure that the necessary traffic flows without interruption, and adjust the policies as needed based on your testing results.
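The review and test steps could look like the sketch below. The target address and port are hypothetical, the probe assumes the service in the peered VPC speaks HTTP, and the `curlimages/curl` image is just one convenient choice for a throwaway pod.

```shell
# Hypothetical placeholders; replace them with your own values.
PEER_IP="10.20.0.5"   # address of a service in the peered VPC
PEER_PORT="8080"      # port that service listens on

# List every NetworkPolicy in the cluster so egress rules can be reviewed.
list_network_policies() {
  kubectl get networkpolicy --all-namespaces
}

# Start a temporary pod, send one HTTP request to the peered VPC, print the
# status code, and delete the pod afterwards. A timeout points to a block.
probe_peer_from_pod() {
  kubectl run peering-probe --rm -i --restart=Never \
    --image=curlimages/curl --command -- \
    curl -sS --max-time 5 -o /dev/null -w '%{http_code}\n' \
    "http://${PEER_IP}:${PEER_PORT}/"
}
```

Run the probe in the same namespace as the affected workloads, since network policies are scoped per namespace and a pod elsewhere may be subject to different rules.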

By carefully managing egress policies with Calico, you can prevent them from inadvertently blocking essential traffic between your GKE cluster and the peered VPC. This proactive approach helps maintain reliable cross-VPC communication, ensuring that your network infrastructure functions smoothly.

Wrapping Up: Conquering the VPC Peering Challenge

Mastering VPC peering in Google Cloud involves a journey through firewall configurations, iptables adjustments, and meticulous connectivity checks. By addressing each of these components, you can overcome the notorious timeout monster and ensure reliable communication across your VPCs. As you fine-tune these settings, you're not only solving immediate connectivity issues but also building a resilient, high-performing network infrastructure ready to handle the demands of a dynamic, multi-project environment. With these tools and insights, you're well-equipped to conquer the challenges of VPC peering, paving the way for smoother, more reliable cloud operations.

About Oneleet: Your Partner in Cybersecurity and Compliance

At Oneleet, we’re all about making cybersecurity and compliance easy and effective. We help businesses like yours stay on top of industry standards and regulations without all the hassle. Our tools and services ensure your infrastructure is secure by default, so you don’t have to worry about unexpected vulnerabilities. With our comprehensive controls and monitoring solutions, you can focus on what you do best, knowing that your systems are safe and sound. Think of us as your go-to team for keeping your IT environment secure and compliant, effortlessly.

Mohammed Nafees

Engineering Team Lead @ Oneleet

Engineering leader with a passion for coding and technology - I love working with Go, C++, and PostgreSQL, and I'm deeply interested in distributed systems, parallel programming, and infrastructure security.
